In part 1 we created a simple photo gallery application which provided a good introduction to Arc. Today we’ll expand on our application by adding the ability to upload multiple files at a time and swap out local storage for AWS S3.

If you’d rather grab the completed source code than follow along, it’s available on GitHub; otherwise, let’s get started!

Getting started

If you’ve been following along you can continue with the code from part 1, or you can grab the part 1 code from GitHub.

Clone the Repo

If cloning:

Terminal

git clone -b part-1 https://github.com/riebeekn/phx-photo-gallery.git photo_gallery

cd photo_gallery

Let’s create a branch for today’s work:

Terminal

git checkout -b part-2

And then let’s get our dependencies and database set up.

Terminal

mix deps.get

Terminal

cd assets && npm install

Now we’ll run ecto.setup to create our database. Note: before running ecto.setup you may need to update the database username and password settings in dev.exs to match your local Postgres settings, for example:

/config/dev.exs …line 69

# Configure your database

config :photo_gallery, PhotoGallery.Repo,

username: "postgres",

password: "postgres",

database: "photo_gallery_dev",

hostname: "localhost",

pool_size: 10Terminal

cd ..

mix ecto.setupWith all that out of the way… let’s see where we are starting from.

Terminal

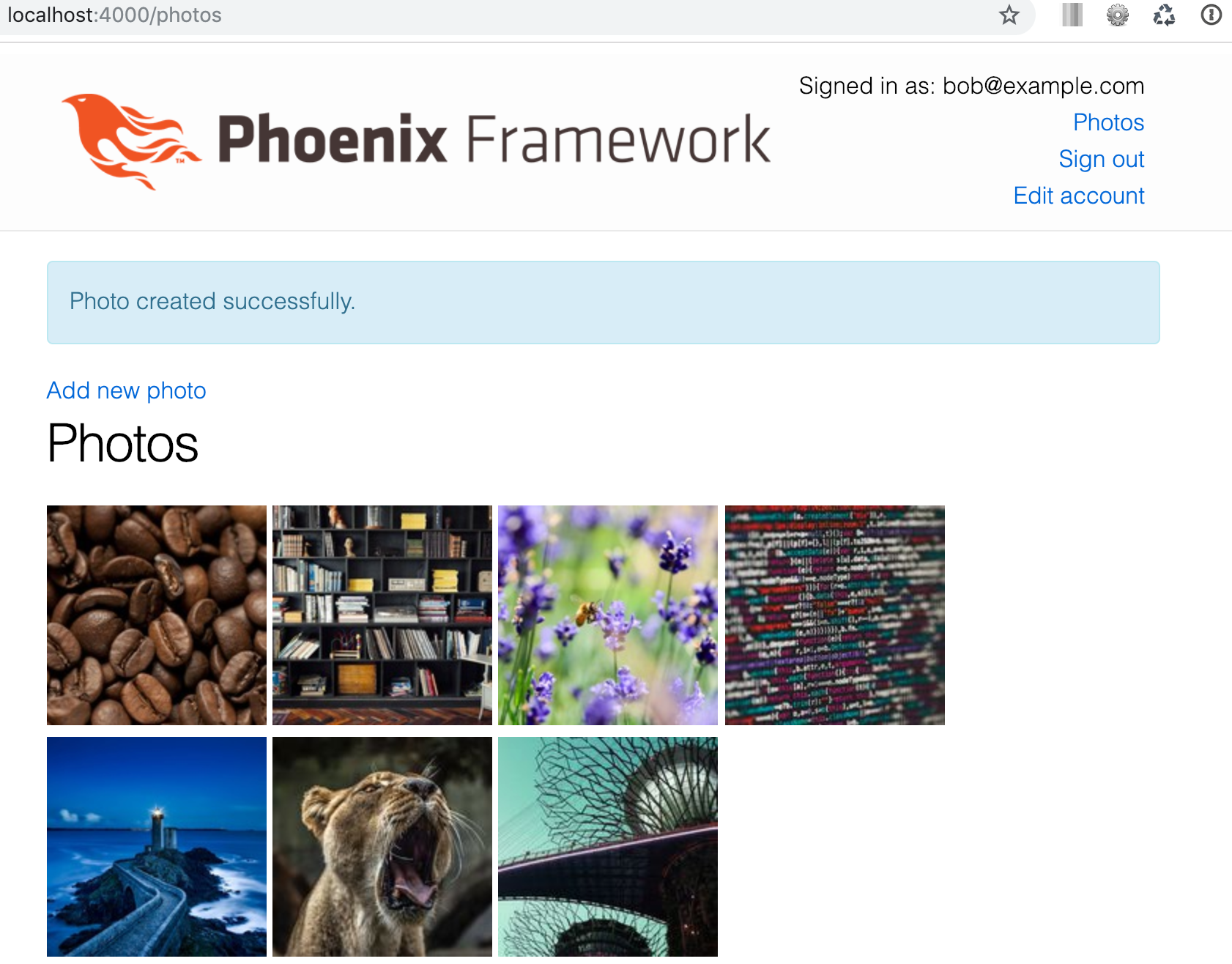

mix phx.serverIf we navigate to http://localhost:4000/, sign in and navigate to our photos page we’ll see our basic application.

Nothing fancy, but it gets the job done. The first thing we’ll tackle today is adding the ability to upload multiple files at a time.

Uploading multiple files at a time

One minor annoyance with our current implementation is that users need to upload their files one photo at a time. What if someone has 20 or 30 photos to upload… it’s going to get very tedious if they are required to upload these files one by one.

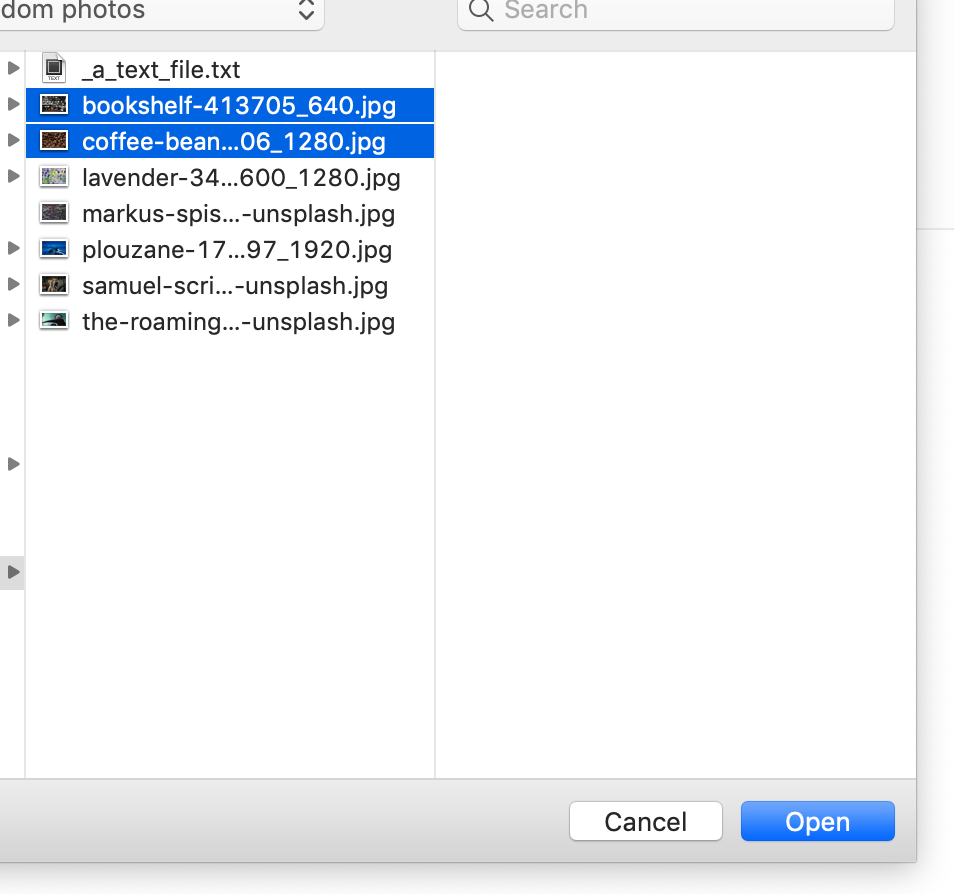

So let’s implement multiple uploads.

The first change we need to make is to update form.html.eex to accept multiple files. This is very simple: we just need to add multiple: :true to the file_input control.

/lib/photo_gallery_web/templates/photo/form.html.eex

<%= form_for @changeset, @action, [multipart: true, as: :photos], fn f -> %>

<%= if @changeset.action do %>

<div class="alert alert-danger">

<p>Oops, something went wrong! Please check the errors below.</p>

</div>

<% end %>

<%= label f, :photos %>

<%= file_input f, :photos, [multiple: :true] %>

<%= error_tag f, :photos %>

<div>

<%= submit "Upload" %>

</div>

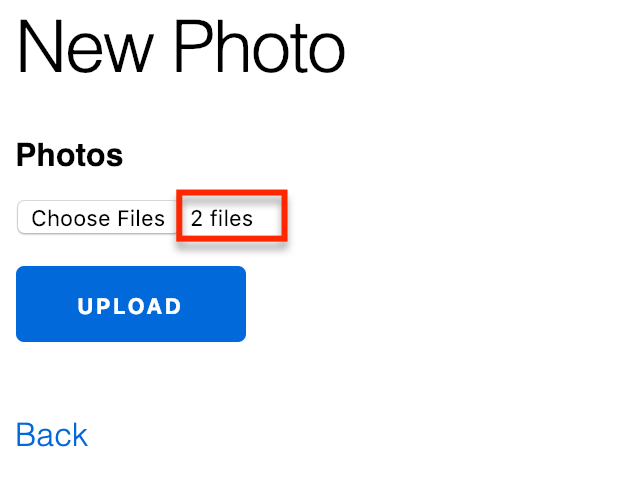

<% end %>We’ve also updated the form_for tag to include an as attribute. This is due to the fact that our multiple files aren’t backed by an actual model field.
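To make the nesting concrete, here is a rough sketch of the parameters the controller can expect once the form is submitted. It assumes that Phoenix appends [] to the input name when multiple is set, so the files arrive as a list under photos, then photos again. Plain maps stand in for the %Plug.Upload{} structs, and the file names and paths are made up for illustration.

```elixir
# Plain maps stand in for %Plug.Upload{} structs (assumption for illustration).
params = %{
  "photos" => %{
    "photos" => [
      %{filename: "beach.jpg", content_type: "image/jpeg", path: "/tmp/plug-1"},
      %{filename: "hills.png", content_type: "image/png", path: "/tmp/plug-2"}
    ]
  }
}

# The outer key comes from `as: :photos`, the inner key from the field name.
%{"photos" => photo_params} = params

# Each list entry is one selected file.
filenames = Enum.map(photo_params["photos"], & &1.filename)
IO.inspect(filenames)
```

The outer match mirrors what the controller action does, and the inner lookup mirrors what the context function does with attrs["photos"].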

Next we need to update our controller code. In the controller we’ll simply call into a new context method create_photos that we will be creating shortly. We’ll also update the success message.

/lib/photo_gallery_web/controllers/photo_controller.ex …line 19

def create(conn, %{"photos" => photo_params}) do

with :ok <- Gallery.create_photos(conn.assigns.current_user, photo_params) do

conn

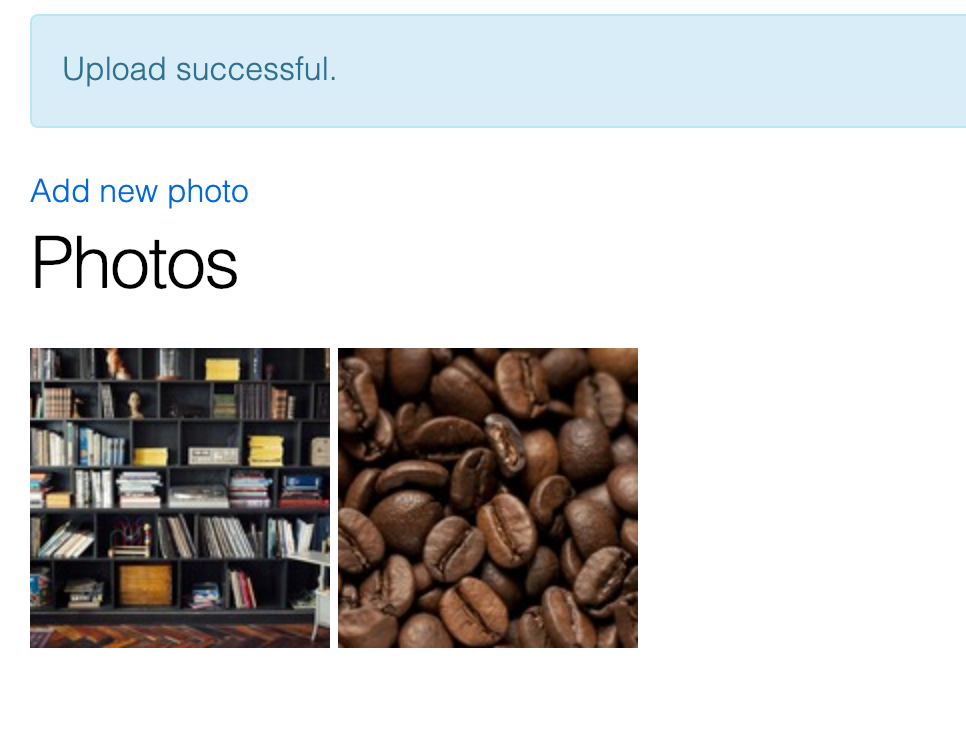

|> put_flash(:info, "Upload successful.")

|> redirect(to: Routes.photo_path(conn, :index))

end

endSo now for that context method. This will be simple; we will just iterate over the photos and call out to the existing create_photo method passing in each photo.

/lib/photo_gallery/gallery/gallery.ex …line 16

def create_photos(%User{} = user, attrs \\ %{}) do

Enum.each attrs["photos"], fn p ->

create_photo(user, %{"photo" => p})

end

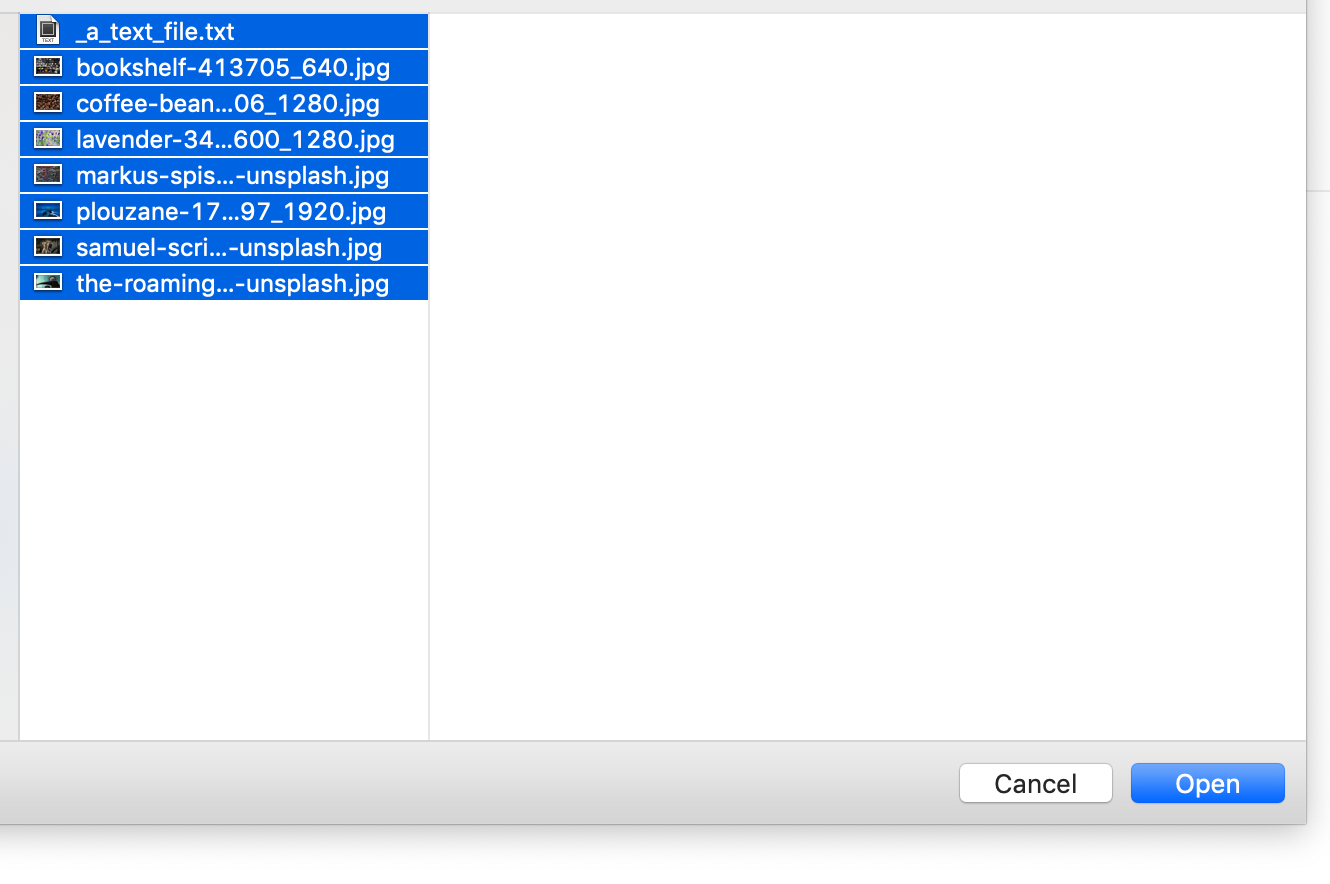

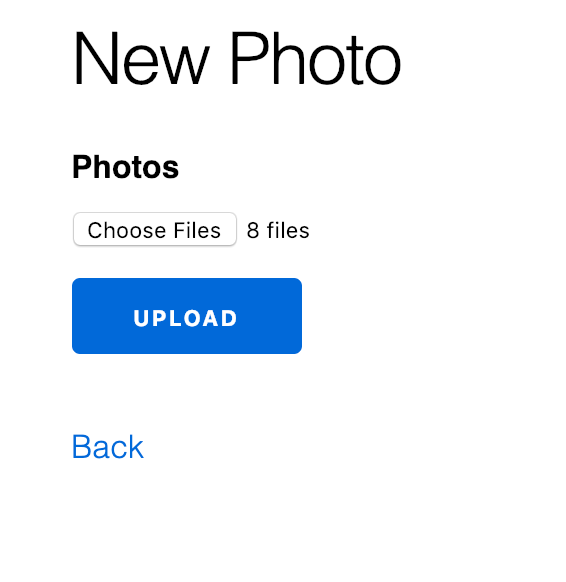

endSweet! We can now select and upload multiple images.
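One thing to be aware of: Enum.each always returns :ok and discards whatever each call returns, so if create_photo returns an error tuple for one of the files, that failure is silently ignored and the controller still reports success. If you would rather surface failures, one possible variation is sketched below. It is not part of the tutorial code: BatchUploadSketch and create_all/2 are hypothetical names, and create_fun stands in for a call to create_photo, which is assumed here to return {:ok, photo} or {:error, reason}.

```elixir
defmodule BatchUploadSketch do
  @doc """
  Calls create_fun for every upload and reports failures instead of dropping them.
  """
  def create_all(uploads, create_fun) when is_list(uploads) do
    results = Enum.map(uploads, create_fun)

    # Separate the {:ok, _} results from everything else.
    case Enum.split_with(results, &match?({:ok, _}, &1)) do
      {oks, []} -> {:ok, Enum.map(oks, fn {:ok, photo} -> photo end)}
      {_oks, errors} -> {:error, Enum.map(errors, fn {:error, reason} -> reason end)}
    end
  end
end

# Stubbed creator for the demo: succeeds for .jpg names, fails otherwise.
create_fun = fn name ->
  if String.ends_with?(name, ".jpg"), do: {:ok, name}, else: {:error, {:invalid, name}}
end

IO.inspect(BatchUploadSketch.create_all(["a.jpg", "b.jpg"], create_fun))
IO.inspect(BatchUploadSketch.create_all(["a.jpg", "notes.txt"], create_fun))
```

Note that in this sketch any uploads that succeeded before a failure stay created; whether to roll them back is a separate design decision.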

Handling large file sizes

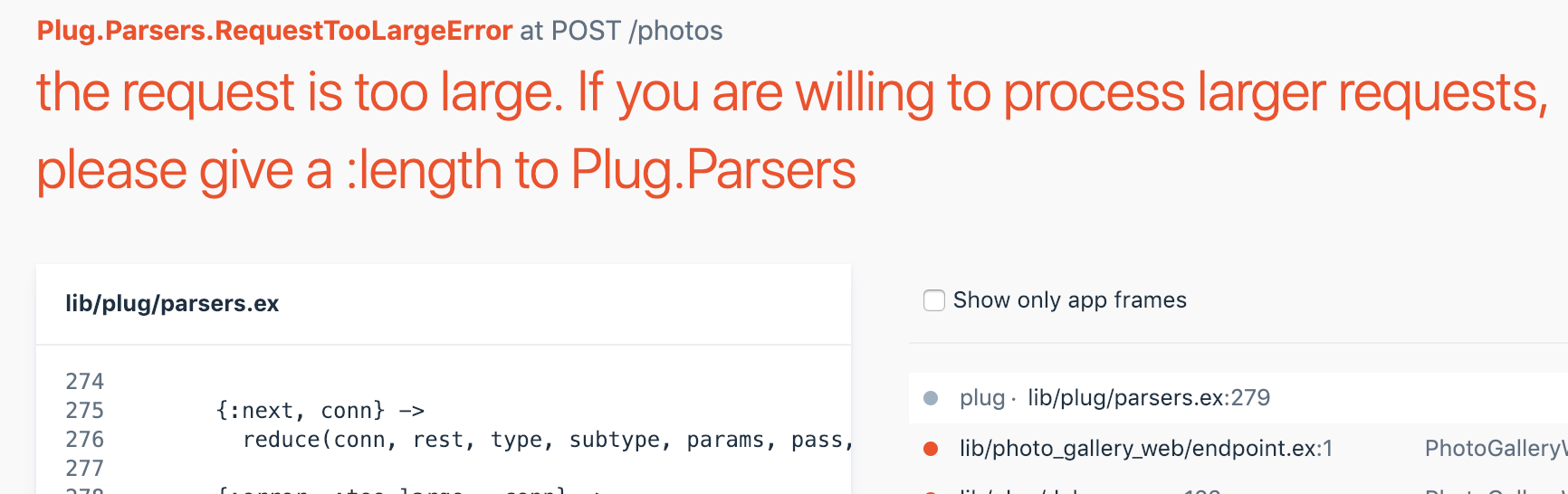

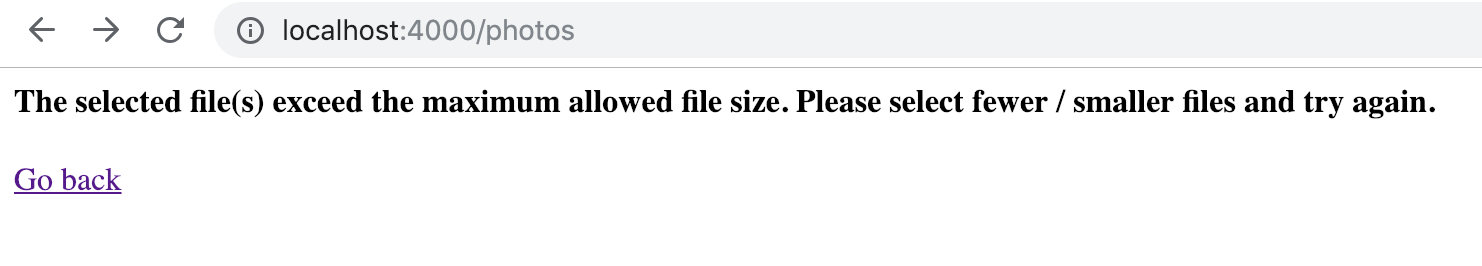

Let’s see what happens if we select a bunch of fairly large files.

We get an error! So we want to do a few things when this scenario presents itself. We’ll want to provide a decent message to the user, and we also want to increase the maximum request size (the default is 8MB).

Improving the error message

Let’s start with the error message. To view what our end users are going to see, we need to switch debug_errors to false in dev.exs.

/config/dev.exs …line 9

config :photo_gallery, PhotoGalleryWeb.Endpoint,

http: [port: 4000],

debug_errors: false,

...

...With any configuration change we need to restart the server.

Terminal

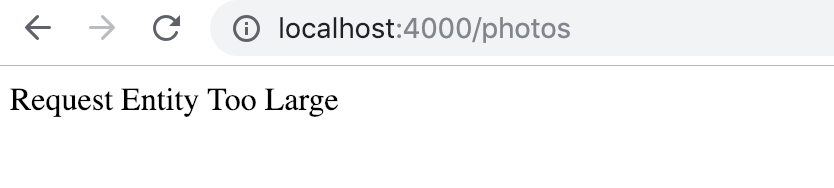

mix phx.serverIf we now attempt our file upload again, we’ll see the following.

Not exactly a friendly error! We won’t spend time creating a pretty error page, but let’s at least improve on things a bit.

We can customize the error shown for a particular status code by creating a template in /templates/error with a name of the status we want to customize.

So in our case we’ll create a 413.html.eex file.

Terminal

mkdir lib/photo_gallery_web/templates/error

touch lib/photo_gallery_web/templates/error/413.html.eex

We’re just going to create a very simple page.

/lib/photo_gallery_web/templates/error/413.html.eex

<h4>The selected file(s) exceed the maximum allowed file size. Please select fewer / smaller files and try again.</h4>

<%= link "Go back", to: Routes.photo_path(@conn, :new) %>

And with that, we end up with a somewhat more user-friendly result.

We should revert our dev.exs and restart the server now that we’ve got our error message figured out.

/config/dev.exs …line 9

config :photo_gallery, PhotoGalleryWeb.Endpoint,

http: [port: 4000],

debug_errors: true,

...

...

Increasing the maximum request size

To facilitate large files and multiple uploads we’re also going to increase the maximum request size. This is done in endpoint.ex.

/lib/photo_gallery_web/endpoint.ex …line 34

plug Plug.Parsers,

parsers: [:urlencoded, {:multipart, length: 200_000_000}, :json],

pass: ["*/*"],

json_decoder: Phoenix.json_library()

We’ve added length: 200_000_000, which raises the maximum size of a multipart request to 200 MB. With this change we can upload our previously too-large photos all in one go… fantastic!

That does it for implementing multiple file uploads, next let’s swap out our local storage for AWS S3.

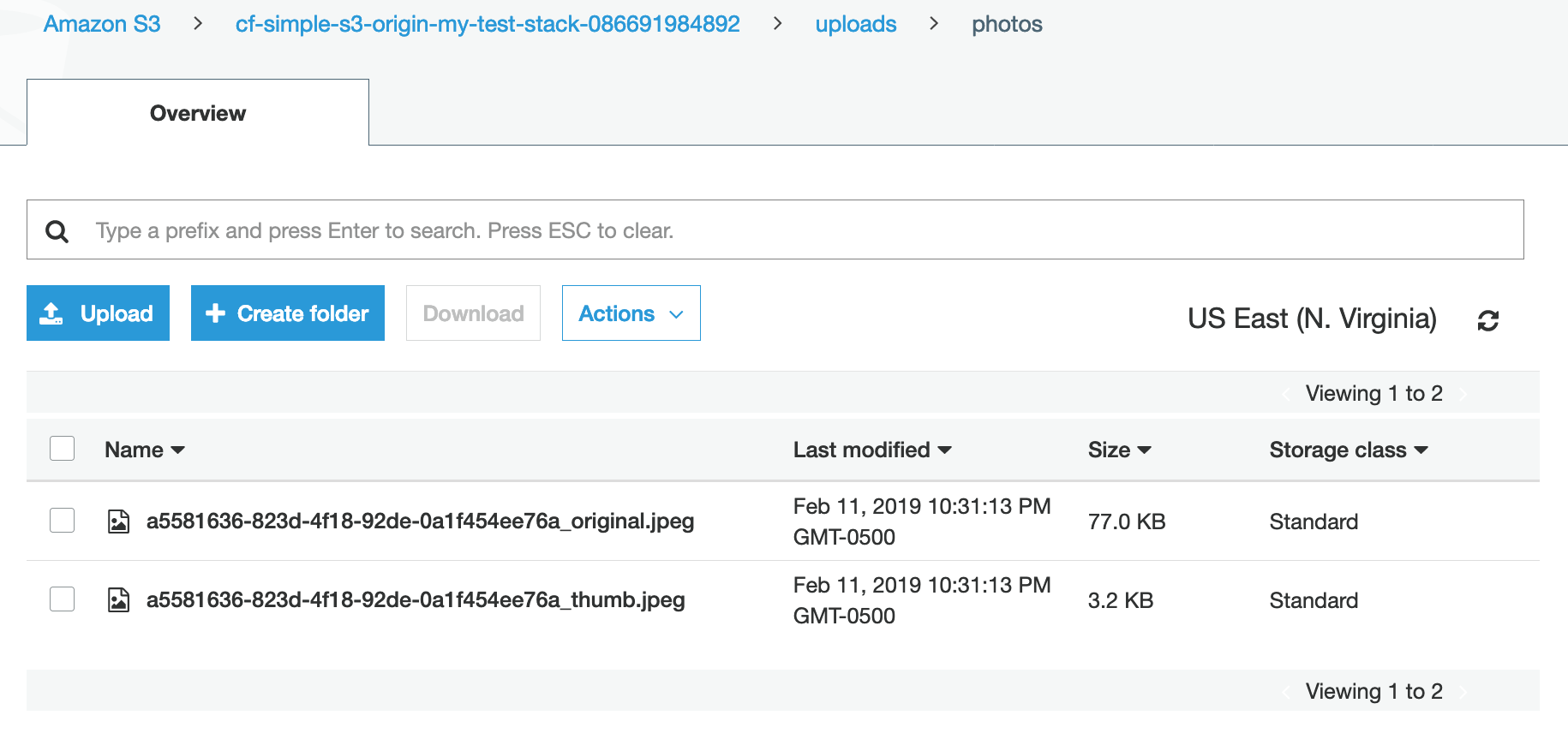

Storing our files on AWS S3

Often in production we’re going to want to use something like AWS S3 for file storage. It is pretty easy to implement this using Arc.

This section assumes you have already set up a bucket on S3 and a CloudFront distribution. If you haven’t yet done so, check out this post for instructions on doing so.

You might also want to delete any images you have currently uploaded as these will not be valid after we switch over to S3.

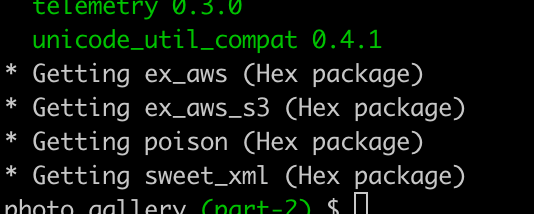

Adding dependencies and updating the Arc configuration

The first step to getting things set up is to add a few new dependencies in mix.exs.

/mix.exs …line 34

defp deps do

[

{:phoenix, "~> 1.4.0"},

{:phoenix_pubsub, "~> 1.1"},

{:phoenix_ecto, "~> 4.0"},

{:ecto_sql, "~> 3.0"},

{:postgrex, ">= 0.0.0"},

{:phoenix_html, "~> 2.11"},

{:phoenix_live_reload, "~> 1.2", only: :dev},

{:gettext, "~> 0.11"},

{:jason, "~> 1.0"},

{:plug_cowboy, "~> 2.0"},

{:pow, "~> 1.0.0"},

{:bodyguard, "~> 2.2"},

{:arc, "~> 0.11.0"},

{:arc_ecto, "~> 0.11.1"},

{:ex_aws, "~> 2.0"},

{:ex_aws_s3, "~> 2.0"},

{:hackney, "~> 1.6"},

{:poison, "~> 3.1"},

{:sweet_xml, "~> 0.6"}

]

end

We’ve added 5 new dependencies, starting with ex_aws. Let’s update our dependencies.

Terminal

mix deps.get

We now need to update our Arc configuration.

/config/config.exs …line 39

# Arc config

config :arc,

storage: Arc.Storage.S3,

bucket: {:system, "S3_BUCKET"},

asset_host: {:system, "ASSET_HOST"}

config :ex_aws,

access_key_id: [{:system, "AWS_ACCESS_KEY_ID"}, :instance_role],

secret_access_key: [{:system, "AWS_SECRET_ACCESS_KEY"}, :instance_role],

region: "us-east-1"

Note: you may need to change the region value above depending on the region of your bucket. See this document for the various region codes.
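The {:system, "S3_BUCKET"} style tuples tell the library to read the value from an environment variable at runtime rather than hard-coding it in the config file. The sketch below illustrates that convention in isolation. It is not the actual Arc or ExAws code; SystemTupleSketch and the SKETCH_S3_BUCKET variable are hypothetical names used only for the demonstration.

```elixir
defmodule SystemTupleSketch do
  # A {:system, name} tuple is looked up in the environment when called.
  def resolve({:system, name}) when is_binary(name), do: System.get_env(name)

  # Any other value is used as-is.
  def resolve(value), do: value
end

# Simulate an exported variable, then resolve both kinds of setting.
System.put_env("SKETCH_S3_BUCKET", "my-bucket")
IO.inspect(SystemTupleSketch.resolve({:system, "SKETCH_S3_BUCKET"}))
IO.inspect(SystemTupleSketch.resolve("us-east-1"))
```

An unset variable resolves to nil in this sketch, which is why forgetting to export a value typically shows up as a missing bucket or credentials error at upload time.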

We’re using a number of environment variables in the configuration, so we need to set these somewhere.

We’ll accomplish this via an .env file. Since this file will contain sensitive information, we don’t want it checked into source control, so it’s very important we add it to our .gitignore file. Let’s do that first.

/.gitignore …line 44

# ignore the file uploads directory

/uploads

# do not check .env into source control

.env

Now let’s create the actual file. When creating configuration files that won’t be checked into source control, I like to also create a template version of the file. This means when grabbing the source code it should be fairly easy for someone to see what configuration values need to be set. So we’ll start by creating the template file.

Terminal

touch .env.template

/.env.template

export S3_BUCKET="YOUR-BUCKET-NAME"

export AWS_ACCESS_KEY_ID="YOUR-ACCESS-KEY"

export AWS_SECRET_ACCESS_KEY="YOUR-SECRET-KEY"

export ASSET_HOST="YOUR-CLOUDFRONT-DIST-URL"

Now we’ll copy the file to create the actual .env file.

Terminal

cp .env.template .env

Now replace the values in the .env file with your actual bucket name, key, secret and CloudFront distribution. This might look something like:

Whenever we make a change or addition to our .env file we need to run source .env to load the values, so let’s do that now.

Terminal

source .env

Updating the uploader module

One final change to get S3 working is to change our uploader module to use public_read as the ACL (access control list). This will allow our application to access the images and display them.

/lib/photo_gallery_web/uploaders/photo.ex

defmodule PhotoGallery.Photo do

use Arc.Definition

use Arc.Ecto.Definition

@extension_whitelist ~w(.jpg .jpeg .gif .png)

# To add a thumbnail version:

@versions [:original, :thumb]

# Set permissions to public read for uploaded files

@acl :public_read

...

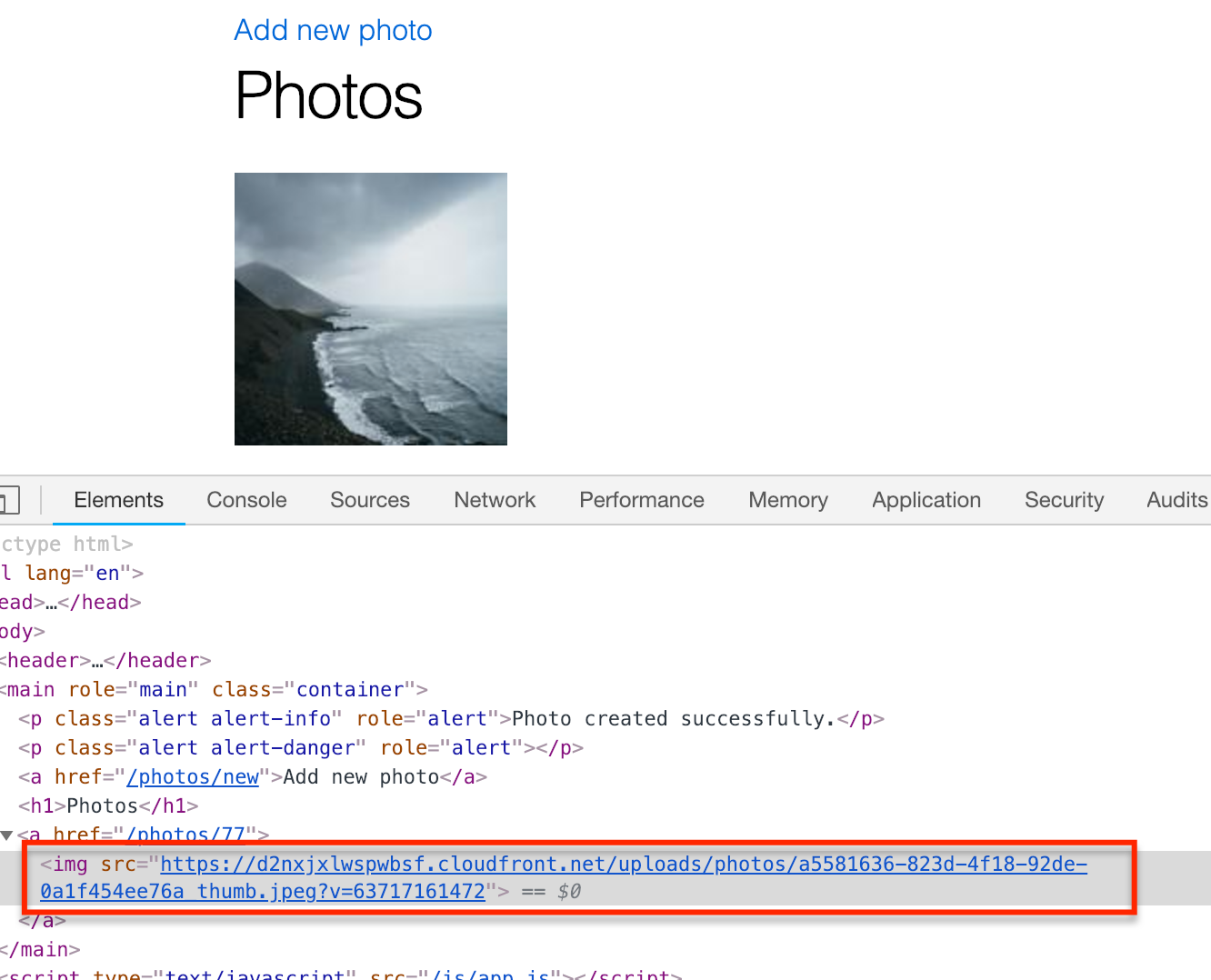

...With all the above in place when we fire up our application it should work as before… but we’ll see our images are being uploaded to S3.

Likewise when we delete an image it will be removed from S3.

Also note that our image URLs are using our CloudFront distribution.

So that’s it! AWS… done!

Changing up the Arc configuration

One final task before ending for the day. We likely don’t want to be uploading and downloading files from S3 when we’re developing. Therefore I think we should move the Arc configuration into environment specific configuration files.

So remove the current Arc configuration from config.exs and place it in prod.exs.

/config/prod.exs …line 69

# Arc config

config :arc,

storage: Arc.Storage.S3,

bucket: {:system, "S3_BUCKET"},

asset_host: {:system, "ASSET_HOST"}

config :ex_aws,

access_key_id: [{:system, "AWS_ACCESS_KEY_ID"}, :instance_role],

secret_access_key: [{:system, "AWS_SECRET_ACCESS_KEY"}, :instance_role],

region: "us-east-1"

# Finally import the config/prod.secret.exs which should be versioned

...

And then in dev.exs and test.exs we’ll add an Arc configuration pointing at local storage.

/config/dev.exs …line 77

# Arc config

config :arc,

storage: Arc.Storage.Local

/config/test.exs …line 20

# Arc config

config :arc,

storage: Arc.Storage.Local

Now when we run our application in development or against a test suite our file uploads will be handled locally.
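The reason this split works is that config.exs pulls in the file for the current environment as its last step, so whatever dev.exs, test.exs or prod.exs sets for :arc is what the running application sees. Assuming the config.exs generated by Phoenix 1.4 has not been changed, the relevant lines should look roughly like this:

```elixir
# config/config.exs (last lines, as generated by Phoenix 1.4)

# Import environment specific config. This must remain at the bottom
# of this file so it overrides the configuration defined above.
import_config "#{Mix.env()}.exs"
```

If your config.exs differs from the generated one, check that this import is still present and still comes last.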

Summary

So this concludes our tour of Arc. I feel it provides a convenient way of handling file uploads and is pretty easy to set up and integrate into a Phoenix application.

In terms of the application we built, it is sufficient for demonstration purposes but would require some changes for a real-world application. A better upload control, or at the very least a spinner widget, would be desirable, as would a better gallery page. Maybe I’ll write up a follow-up post in the future addressing these items… but for now we’re done and dusted!

Thanks for reading and I hope you enjoyed the post!