Continuing on with our DevOps journey, today we’ll run through some basic set-up steps in preparation for deploying the docker image we created in part 1 to AWS.

This post assumes you already have an AWS account set up; if not, you’ll want to sign up for one before proceeding.

Manual set-up steps

Before diving into Terraform and actually deploying our application, we have a few one-time set-up steps to address:

- Create an AWS access key.

- Create an ECR repository for the docker image we created in part 1.

- Upload the docker image to ECR.

- Create AWS Secrets Manager entries for our secret key base, database user and database password values.

- Create a key pair so that we can SSH into our server on AWS once it has been provisioned.

Let’s get at it!

Create an AWS access key

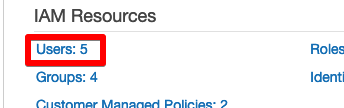

In order to interact with AWS we’re going to need an access key. We can create an access key via the IAM console.

After logging in to the AWS Console, search for and navigate to the IAM home page.

Then click on Users.

On the Users page select Add User.

I’ll create a temporary user for this post. Make sure to select programmatic access for the user, since what we need is an access key rather than a console password.

We’ll make this user an administrator, which we can do by creating or selecting a group with the AdministratorAccess policy.

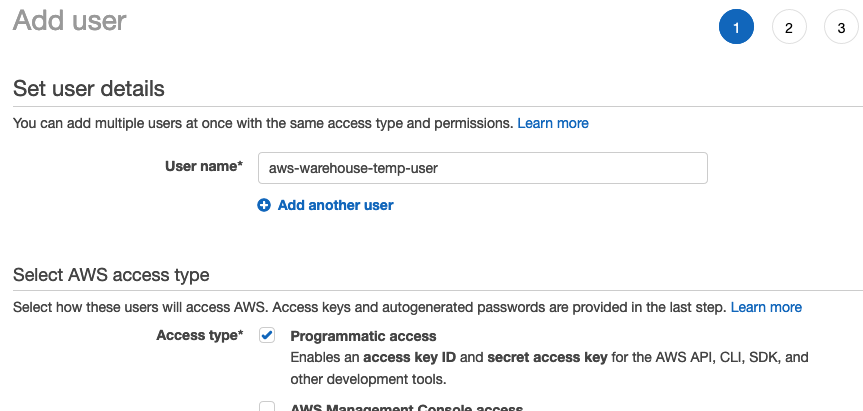

Click Next on the tags screen and create the user.

Download the .csv file containing the access key ID and secret access key, as we’ll need these values later. The secret access key is only shown when the key is created, so don’t skip this step.
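The AWS CLI used later in this post needs these credentials too. Below is a minimal sketch of storing them as a named CLI profile and checking that they work; the helper name `configure_aws_profile` and the default profile name `aws-warehouse` are hypothetical choices for this example, not something the rest of the series depends on.

```shell
# Hypothetical helper: save the downloaded access key as a named AWS CLI
# profile, then check that the credentials work.
# The default profile name "aws-warehouse" and region are assumptions.
configure_aws_profile() {
  local key_id="$1" secret="$2"
  local profile="${3:-aws-warehouse}" region="${4:-us-east-1}"

  aws configure set aws_access_key_id "$key_id" --profile "$profile"
  aws configure set aws_secret_access_key "$secret" --profile "$profile"
  aws configure set region "$region" --profile "$profile"

  # Prints the account ID and the IAM user's ARN if the key is valid.
  aws sts get-caller-identity --profile "$profile"
}
```

For example, run `configure_aws_profile <access key id> <secret access key>`, then `export AWS_PROFILE=aws-warehouse` so the `aws` commands that follow use this profile. Passing the secret as an argument leaves it in your shell history; the interactive `aws configure --profile aws-warehouse` prompt avoids that.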

And with that we are ready to create the ECR repository.

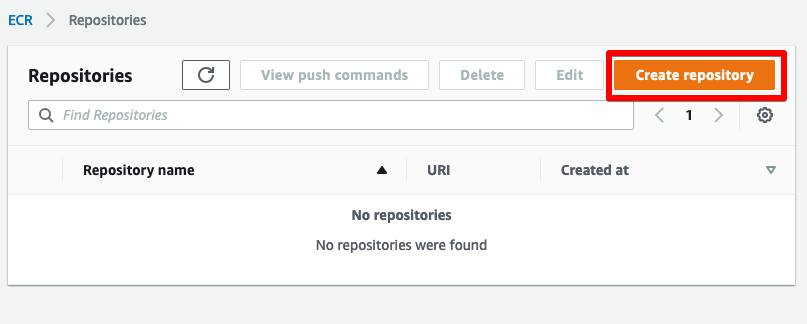

Create an ECR repository

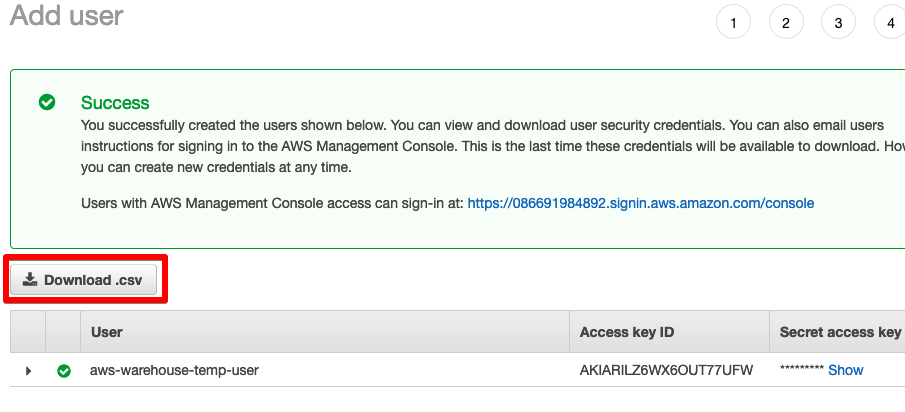

ECR (Elastic Container Registry) is similar to Docker Hub in that it provides a place to store docker images.

In theory we could create the repository with Terraform, but since it’s really a prerequisite of the deployment rather than part of it, we’ll just create it manually. It’s a very simple process: after logging in to the AWS Console, search for and navigate to the ECR home page.

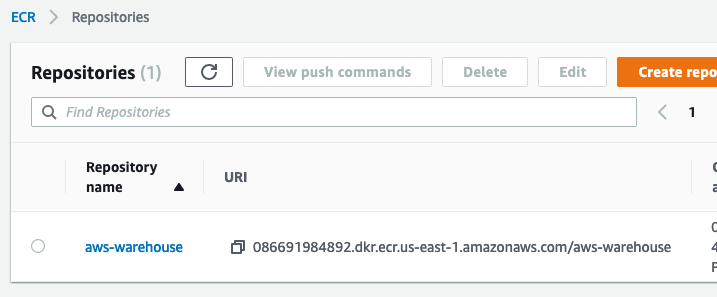

From the ECR home page, select Create repository and enter a name for the repository; we’ll use aws-warehouse.
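The same repository can be created from the terminal once the AWS CLI is installed and configured. Here is a sketch wrapped in a hypothetical helper, `create_ecr_repo`, which asks the CLI to print only the new repository’s URI.

```shell
# Hypothetical helper: create an ECR repository and print its URI.
create_ecr_repo() {
  local name="${1:-aws-warehouse}" region="${2:-us-east-1}"
  aws ecr create-repository \
    --repository-name "$name" \
    --region "$region" \
    --query 'repository.repositoryUri' \
    --output text
}
```

Running `create_ecr_repo aws-warehouse us-east-1` should print a URI of the form `<account id>.dkr.ecr.us-east-1.amazonaws.com/aws-warehouse`, which is the `<ECR URI>/aws-warehouse` value used in the next section.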

We now have a repository for our docker image.

Upload the docker image

Let’s tag and upload the docker image we created in part 1. You’ll need the AWS CLI installed, and configured with the access key created earlier (for example via aws configure), in order to upload the image to ECR. If you’re on macOS, you may want to use Homebrew to install the CLI. It’s been a while since I’ve had to install it, but I believe Homebrew was the easiest option.

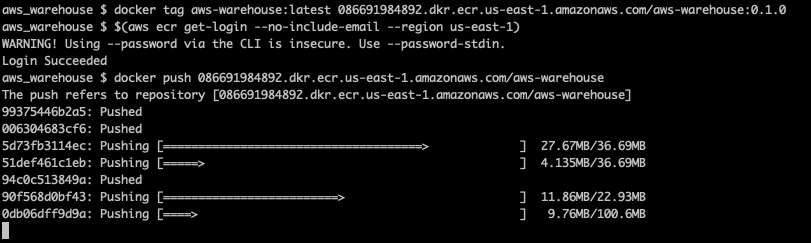

```shell
docker tag aws-warehouse:latest <ECR URI>/aws-warehouse:0.1.0
aws ecr get-login-password --region us-east-1 | docker login --username AWS --password-stdin <ECR URI>
docker push <ECR URI>/aws-warehouse:0.1.0
```

You’ll need to replace <ECR URI> with the actual value for your ECR repository; for example, in my case it would be:

```shell
docker tag aws-warehouse:latest 086691984892.dkr.ecr.us-east-1.amazonaws.com/aws-warehouse:0.1.0
aws ecr get-login-password --region us-east-1 | docker login --username AWS --password-stdin 086691984892.dkr.ecr.us-east-1.amazonaws.com
docker push 086691984892.dkr.ecr.us-east-1.amazonaws.com/aws-warehouse:0.1.0
```

Note: you’ll also need to update the region, both in the URI and in the --region flag, if you are using a region other than us-east-1.
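If you’d rather not copy the account ID by hand, the tag, login and push steps can be wrapped in a small script that looks the account ID up from the current credentials. This is a sketch assuming a current AWS CLI (`get-login-password` is available in v2 and recent v1 releases) and a local image tagged `aws-warehouse:latest`; the function names `ecr_registry` and `push_to_ecr` are made up for this example.

```shell
# Hypothetical helper: build the ECR registry host from account ID and region.
ecr_registry() {
  printf '%s.dkr.ecr.%s.amazonaws.com\n' "$1" "$2"
}

# Hypothetical helper: tag the local image, log Docker in to ECR,
# and push one explicit version tag.
push_to_ecr() {
  local version="$1" region="${2:-us-east-1}" repo="${3:-aws-warehouse}"
  local account registry

  # Look up the account ID of the current credentials instead of hard-coding it.
  account=$(aws sts get-caller-identity --query Account --output text) || return 1
  registry=$(ecr_registry "$account" "$region")

  docker tag "$repo:latest" "$registry/$repo:$version" || return 1
  aws ecr get-login-password --region "$region" |
    docker login --username AWS --password-stdin "$registry" || return 1
  # Push the explicit tag; a bare repository name would only push ":latest".
  docker push "$registry/$repo:$version"
}
```

For example, `push_to_ecr 0.1.0 us-east-1` should perform the same three steps as the commands above.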

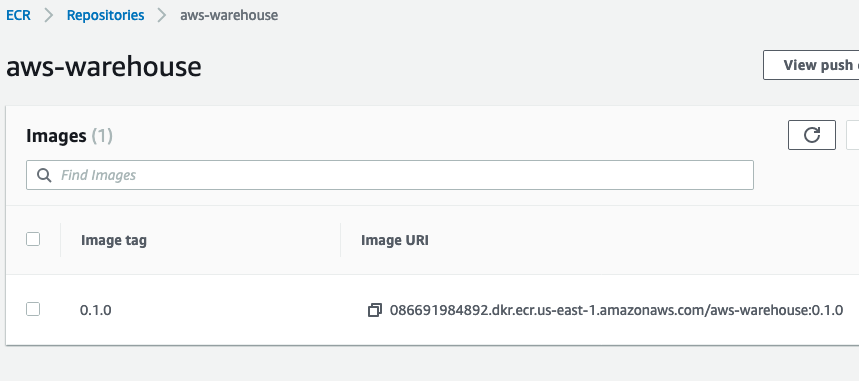

After the image has finished uploading, you’ll see it in the ECR repository.
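You can also confirm the upload from the terminal. A sketch using `aws ecr describe-images`, wrapped in a hypothetical helper named `list_ecr_tags` that prints every tag stored in the repository:

```shell
# Hypothetical helper: list the image tags stored in an ECR repository.
list_ecr_tags() {
  local repo="${1:-aws-warehouse}" region="${2:-us-east-1}"
  aws ecr describe-images \
    --repository-name "$repo" \
    --region "$region" \
    --query 'imageDetails[].imageTags[]' \
    --output text
}
```

After the push above, running `list_ecr_tags` should include 0.1.0 in its output.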

Now that the docker image has been uploaded, the next step is to securely store sensitive environment settings for our application. We’ll do so with AWS Secrets Manager.

AWS Secrets Manager

If you recall from part 1, we have a number of runtime settings that we pass to our docker image. We could specify these directly in our deployment scripts, but that would present a potential security issue: we don’t want the secret_key_base, database user or database password values stored in plain text in our deployment scripts.

There are a number of solutions to this; sometimes these values are stored and managed by the CI tool being used, but in our case we’ll use AWS Secrets Manager.

Navigate to Secrets Manager in the AWS Console and click Store a new secret.

Then select “Other type of secrets” and add the secret_key_base as a key/value pair. Generate the value by running mix phx.gen.secret. You can use any key you want, but if you’re following along it will be easiest to use the same keys as me for all the secrets, i.e. in this case SECRET_KEY_BASE.

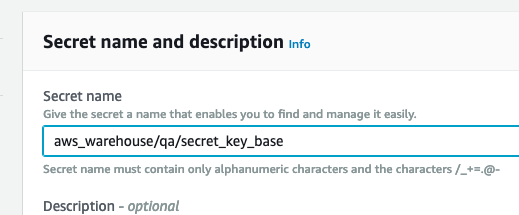

We’ll pretend we are creating a QA environment, so we’ll name the secret appropriately (aws_warehouse/qa/secret_key_base).

Click Next, then Next again on the following screen (keeping the defaults, i.e. don’t enable automatic rotation), and click Store on the final page.

Follow the same process for the database user and database password fields. I used the following values, and again it will be easiest if you use the same key and name values:

| Key | Value | Secret name |
|---|---|---|
| DB_USER | qaWarehouseDbUser | aws_warehouse/qa/db_user |
| DB_PASSWORD | 7N4kVT9Y0CelRHpCpyqp | aws_warehouse/qa/db_password |
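The three secrets can also be created with the AWS CLI instead of the console. This is a sketch assuming each secret holds a single key/value pair, stored as a JSON object the way the console’s “Other type of secrets” option stores it, and that values contain no double quotes or backslashes, since the JSON is assembled with printf rather than a JSON tool. `create_kv_secret` is a hypothetical helper name.

```shell
# Hypothetical helper: create a Secrets Manager secret holding one
# key/value pair, e.g. {"DB_USER":"qaWarehouseDbUser"}.
# Assumes the value has no double quotes or backslashes (no JSON escaping).
create_kv_secret() {
  local name="$1" key="$2" value="$3" region="${4:-us-east-1}"
  aws secretsmanager create-secret \
    --name "$name" \
    --region "$region" \
    --secret-string "$(printf '{"%s":"%s"}' "$key" "$value")"
}
```

For example, `create_kv_secret aws_warehouse/qa/secret_key_base SECRET_KEY_BASE "$(mix phx.gen.secret)"` generates and stores the secret key base in one step, and `create_kv_secret aws_warehouse/qa/db_user DB_USER qaWarehouseDbUser` recreates the database user entry from the table above.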

After adding the above, you should have the following three AWS secrets.
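To check the same thing from the terminal without printing any secret values, the sketch below calls `aws secretsmanager describe-secret`, which returns a secret’s metadata but not its value. The helper name `check_secrets` is hypothetical, and the secret names are the ones used in this post.

```shell
# Hypothetical helper: report whether each expected QA secret exists.
# Returns non-zero if any of them is missing.
check_secrets() {
  local region="${1:-us-east-1}" missing=0 name
  for name in aws_warehouse/qa/secret_key_base \
              aws_warehouse/qa/db_user \
              aws_warehouse/qa/db_password; do
    if aws secretsmanager describe-secret --secret-id "$name" --region "$region" >/dev/null 2>&1; then
      echo "found:   $name"
    else
      echo "missing: $name"
      missing=1
    fi
  done
  return "$missing"
}
```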

Secrets done! One final step and we’re done with our prerequisites.

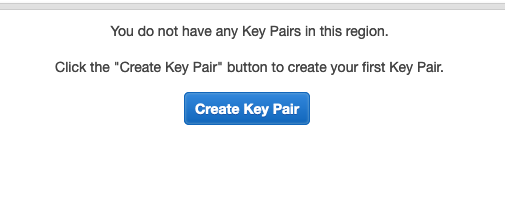

Create a key pair

Creating a key pair will allow us to SSH into our AWS server once it is provisioned. This can be handy for inspecting the docker logs or troubleshooting issues in general.

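Creating a key pair is simple: just navigate to the EC2 console, making sure you’re in the region you’ll deploy to (us-east-1 here), since key pairs are region-specific.

Select Key Pairs, then create and download a new key pair.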

Make note of where the .pem file is saved on your local machine; you’ll need it if you want to SSH into the server once it is provisioned.
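Alternatively, the key pair can be created from the CLI. This sketch assumes a hypothetical key name, aws-warehouse-qa, and a hypothetical helper name, `create_key_pair`. Because the private key is only returned once, at creation, the helper writes it straight to a .pem file and restricts the file’s permissions, since ssh refuses private keys that other users can read.

```shell
# Hypothetical helper: create an EC2 key pair and save the private key
# as <name>.pem in the current directory, readable only by you.
create_key_pair() {
  local name="${1:-aws-warehouse-qa}" region="${2:-us-east-1}"
  local pem="$name.pem"

  # Never overwrite an existing key file.
  if [ -e "$pem" ]; then
    echo "$pem already exists; not overwriting" >&2
    return 1
  fi

  aws ec2 create-key-pair \
    --key-name "$name" \
    --region "$region" \
    --query KeyMaterial \
    --output text > "$pem" || { rm -f "$pem"; return 1; }

  chmod 400 "$pem"
  echo "$pem"
}
```

Once the server exists, you’d connect with something like `ssh -i aws-warehouse-qa.pem ec2-user@<server public IP>`. The login user depends on the AMI, for example ec2-user on Amazon Linux or ubuntu on Ubuntu.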

Summary

That does it for the prerequisites. It’s a pretty short post today, but next time will be more exciting: we’ll finally be deploying our application. See you then!

Thanks for reading!